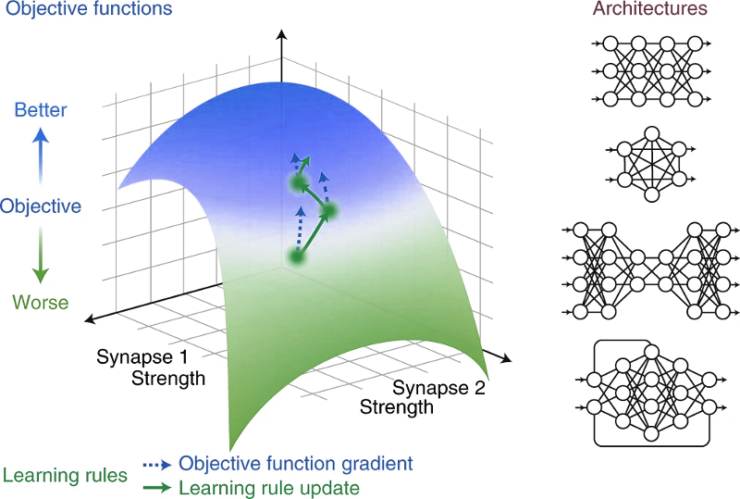

Our perspective paper on how systems neuroscience can benefit from deep learning was published today. In work led by Blake Richards, Tim Lillicrap, and Konrad Kording, we argue that focusing on the three core elements used to design deep learning systems — network architecture, objective functions, and learning rules — offers a fresh approach to understanding the computational principles of neural circuits.

Paper

Richards, B.A., Lillicrap, T.P., Beaudoin, P., Bengio, Y., Bogacz, R., Christensen, A., Clopath, C., Costa, R.P., de Berker, A., Ganguli, S., Gillon, C.J., Hafner, D., Kepecs, A., Kriegeskorte, N., Latham, P., Lindsay, G.W., Miller, K.D., Naud, R., Pack, C.C., Poirazi, P., Roelfsema, P., Sacramento, J., Saxe, A., Scellier, B., Schapiro, A.C., Senn, W., Wayne, G., Yamins, D., Zenke, F., Zylberberg, J., Therien, D., Kording, K.P., 2019. A deep learning framework for neuroscience. Nat Neurosci 22, 1761–1770. https://doi.org/10.1038/s41593-019-0520-2