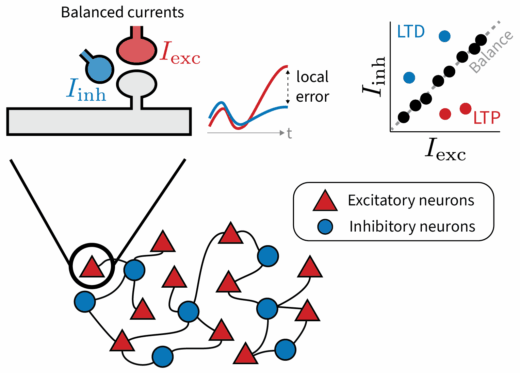

Why does the brain maintain such precise excitatory-inhibitory balance? Our new preprint, led by Julian Rossbroich, explores a provocative idea: small, targeted deviations from this balance may serve a purpose, namely to encode local error signals… Continue reading

Category: publications

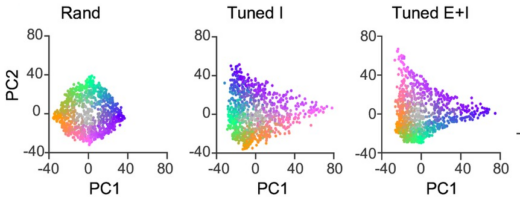

Paper: Geometry and dynamics of representations in a precisely balanced memory network

Claire’s article “Geometry and dynamics of representations in a precisely balanced memory network related to olfactory cortex” was published. We built a spiking network model of the telencephalic area Dp in adult zebrafish, which is… Continue reading

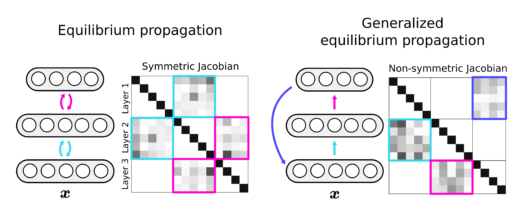

Improving equilibrium propagation without weight symmetry through Jacobian homeostasis

We are happy that our new paper “Improving equilibrium propagation (EP) without weight symmetry through Jacobian homeostasis,” led by Axel, was accepted at ICLR 2024. Preprint: https://arxiv.org/abs/2309.02214 Code: https://github.com/Laborieux-Axel/generalized-holo-ep EP prescribes a local learning rule and uses recurrent… Continue reading

Paper: Disinhibitory neuronal circuits are ideally poised to control the sign of synaptic plasticity

In Julian’s new paper “Dis-inhibitory neuronal circuits can control the sign of synaptic plasticity,” accepted at NeurIPS, we look at how to reconcile normative theories of gradient-based learning in the brain with phenomenological models of… Continue reading

Paper: Implicit variance regularization in non-contrastive SSL

New paper from the lab accepted at NeurIPS: “Implicit variance regularization in non-contrastive SSL.” In our article, first-authored by Manu and Axel, we deepen the understanding of how non-contrastive self-supervised learning (SSL) methods avoid collapse. Continue reading

Paper: Latent Predictive Learning

Our paper “The combination of Hebbian and predictive plasticity learns invariant object representations in deep sensory networks” is now published in Nature Neuroscience. https://www.nature.com/articles/s41593-023-01460-y Sensory networks in our brain represent environmental objects as points on… Continue reading

New preprint: Improving equilibrium propagation without weight symmetry

I am happy to announce our new preprint on “Improving equilibrium propagation (EP) without weight symmetry through Jacobian homeostasis,” led by Axel. https://arxiv.org/abs/2309.02214 EP prescribes a local learning rule and uses recurrent dynamics for credit… Continue reading

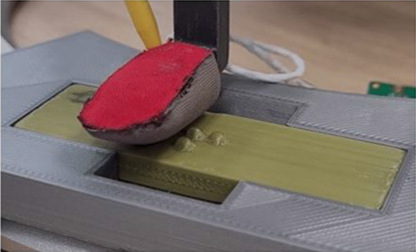

A tactile robotic finger tip and a spiking neural network read Braille

This fun project started as a summer student project at Telluride 2021, led and advised by Chiara Bartolozzi and me. An incredibly motivated group of summer students taught a robotic fingertip with tactile sensors and… Continue reading

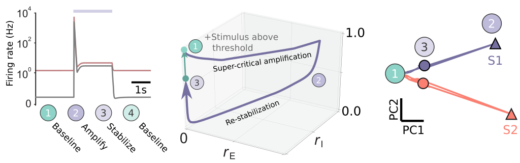

Paper: Nonlinear transient amplification in recurrent neural networks with short-term plasticity

Update (21.12.2021): Now published at https://elifesciences.org/articles/71263 I am pleased to share our new preprint, “Nonlinear transient amplification in recurrent neural networks with short-term plasticity,” led by Yue Kris Wu, in which we study possible mechanisms for… Continue reading

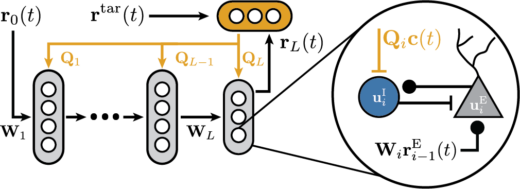

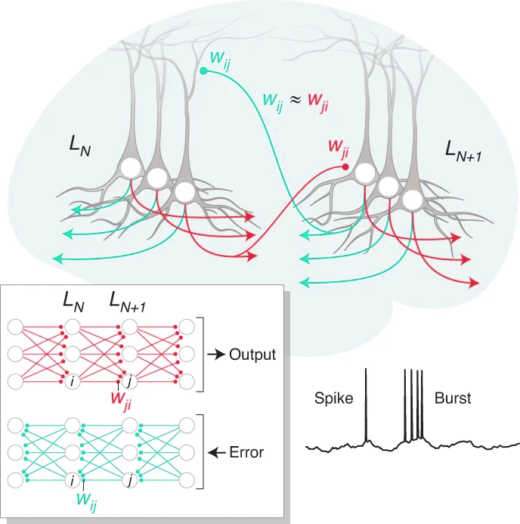

Paper: Burst-dependent synaptic plasticity can coordinate learning in hierarchical circuits

We’re excited to see this published. A new take on spatial credit assignment in cortical circuits based on Richard Naud’s idea of burst multiplexing. A truly collaborative effort led by Alexandre Payeur and Jordan Guerguiev… Continue reading