We’re happy to share our new preprint “Understanding cortical computation through the lens of joint-embedding predictive architectures” led by Atena and Manu 🚀

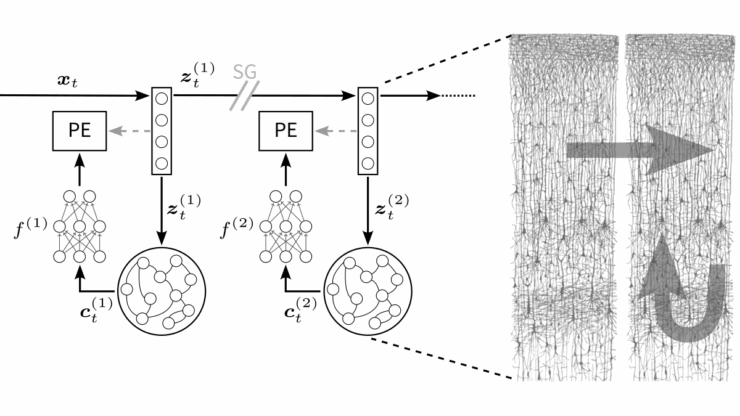

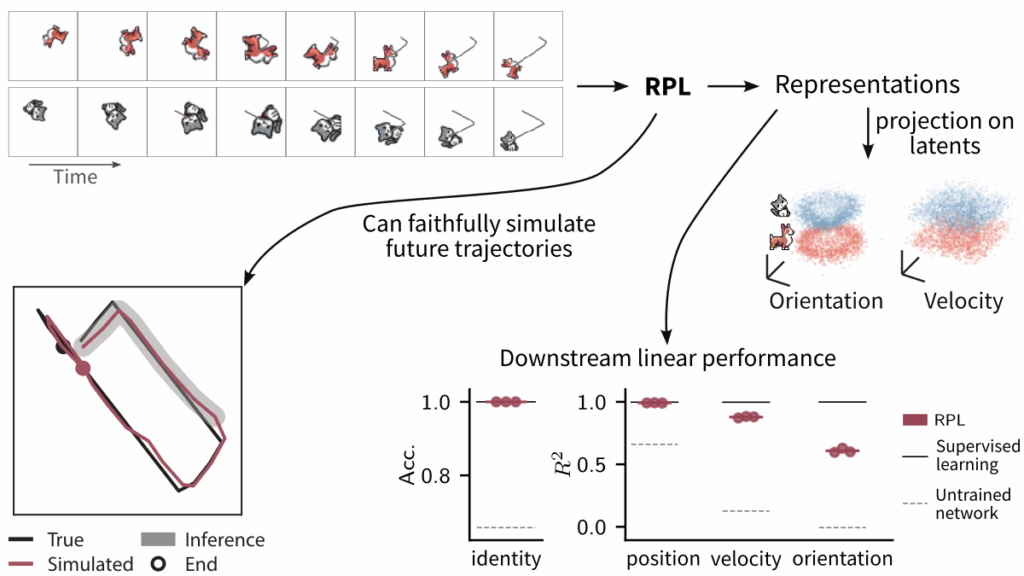

We asked how the cortex learns to represent objects and their motion without requiring labels or reconstructing sensory stimuli. To that end, we developed a circuit-centric recurrent predictive learning (RPL) model based on joint-embedding predictive architectures (JEPAs).

Preprint: https://doi.org/10.1101/2025.11.25.690220

Code: https://github.com/fmi-basel/recurrent-predictive-learning

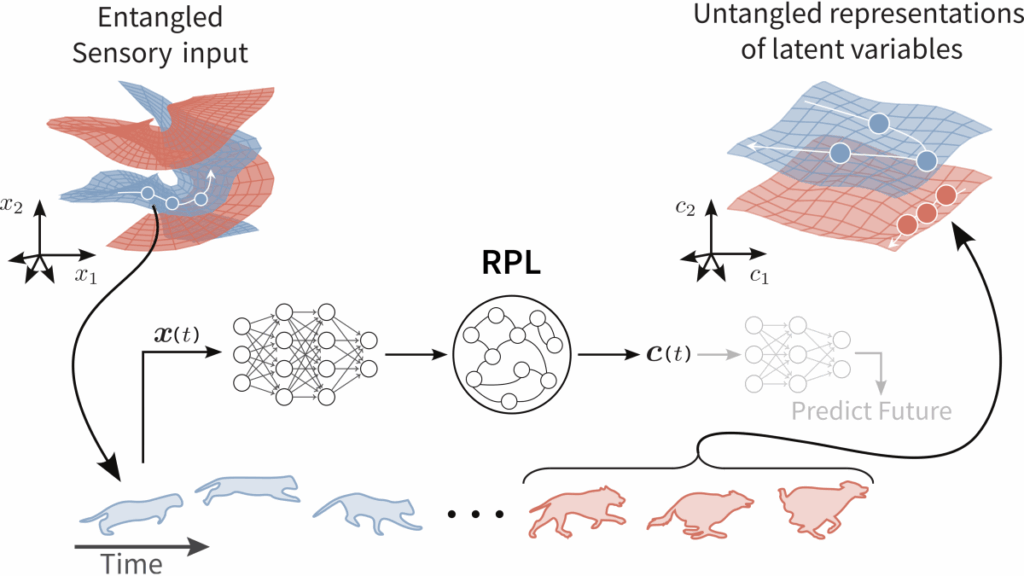

RPL operates entirely in latent space, which avoids the anatomical issues faced by predictive coding models that compute prediction errors in input space. Instead of reconstructing its inputs, the network predicts its own future internal representations through a specific recurrent circuit structure.
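To make the latent-space idea concrete, here is a minimal NumPy sketch of a JEPA-style prediction error. It assumes a hypothetical linear encoder (`W_enc`), a hypothetical predictor (`W_pred`), and made-up sizes `D` and `K`. It is not the RPL circuit from the preprint; it only illustrates that the error is computed between latent vectors rather than between predicted and actual input frames.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: input dimension D, latent dimension K (not taken from the preprint).
D, K = 64, 8

# Hypothetical linear encoder and latent predictor. The actual RPL model uses a
# recurrent circuit described in the preprint and code repository.
W_enc = rng.normal(scale=1 / np.sqrt(D), size=(K, D))
W_pred = rng.normal(scale=1 / np.sqrt(K), size=(K, K))


def encode(x):
    """Map an input frame of size D to a latent vector of size K."""
    return np.tanh(W_enc @ x)


def latent_error(x_t, x_next):
    """Prediction error computed in latent space: the predicted next latent is
    compared with the encoding of the next frame, so the error has K entries
    rather than D. JEPA-style training typically blocks gradients through the
    target and adds a mechanism against collapse; this sketch only evaluates
    the error and does no training."""
    z_pred = W_pred @ encode(x_t)
    z_target = encode(x_next)
    return z_pred - z_target


x_t = rng.normal(size=D)
x_next = rng.normal(size=D)
e = latent_error(x_t, x_next)
loss = float(np.mean(e ** 2))
print(e.shape, loss)
```

Nothing in this loss requires a decoder back to pixels, which is the property the thread contrasts with input-space predictive coding.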

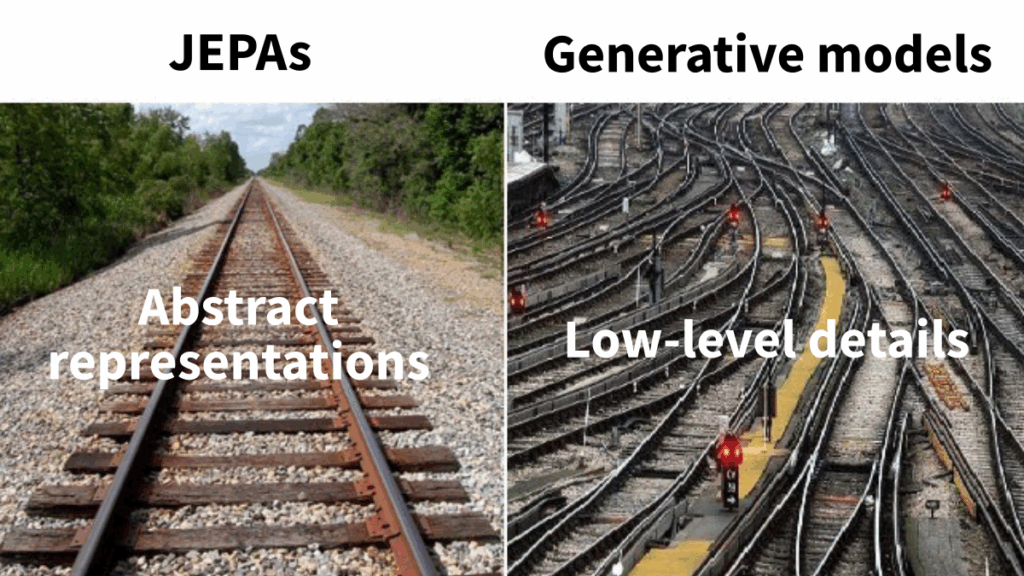

Recent studies indicate that, beyond their biological plausibility, representation-space predictive models like JEPAs also learn more abstract representations than input-space generative models, which tend to focus on low-level details.

From raw video streams and without supervision, RPL learns invariant object identity, equivariant motion variables (position, velocity, orientation, etc.), and a world model that can simulate plausible motion trajectories entirely in latent space.
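Simulating trajectories "entirely in latent space" can be illustrated by repeatedly feeding a latent predictor its own output. The sketch below assumes a hypothetical random transition matrix `A` and a hypothetical `rollout` helper; in a trained model the predictor would be learned from video, and RPL's actual world model is described in the preprint.

```python
import numpy as np

rng = np.random.default_rng(1)

K = 8      # hypothetical latent dimension
STEPS = 5  # hypothetical number of imagined future steps

# Hypothetical latent transition (predictor) weights; a real model would learn these.
A = rng.normal(scale=1 / np.sqrt(K), size=(K, K))


def rollout(z0, steps):
    """Simulate a trajectory purely in latent space by feeding each prediction
    back in as the next state. No input frames are generated or decoded;
    tanh keeps every predicted state in [-1, 1]."""
    traj = [z0]
    for _ in range(steps):
        traj.append(np.tanh(A @ traj[-1]))
    return np.stack(traj)


z0 = rng.normal(size=K)
trajectory = rollout(z0, STEPS)
print(trajectory.shape)  # (6, 8): the initial state plus STEPS predicted states
```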

Importantly, RPL captures representational motifs across multiple species and cortical areas: it develops successor-like structures resembling those in human V1, and its abstract sequence representations are comparable to those in macaque PFC.

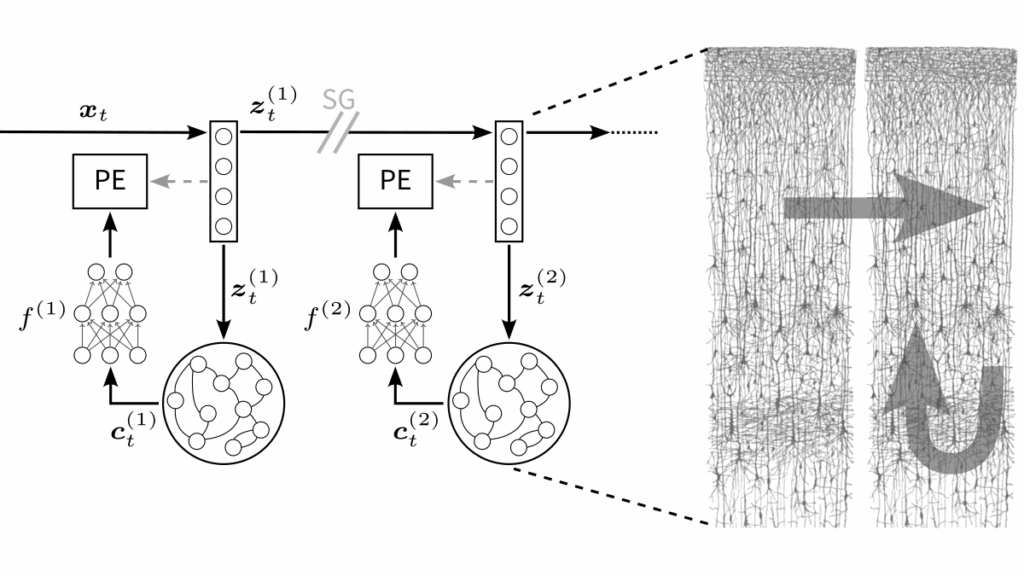

Finally, we build a hierarchical JEPA version of our model and outline how its architecture could map onto cortical microcircuits, moving toward a predictive-processing framework with mechanistic links to neuroanatomy.