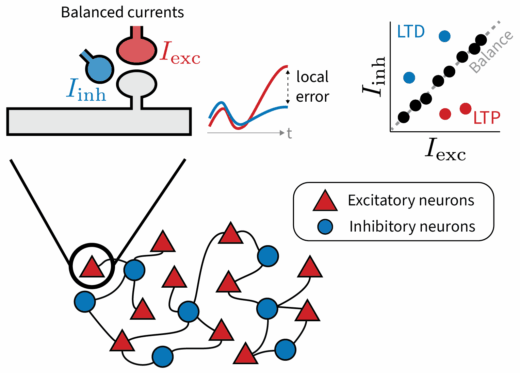

Why does the brain maintain such precise excitatory-inhibitory balance? Our new preprint, led by Julian Rossbroich, explores a provocative idea: small, targeted deviations from this balance may serve a purpose, namely to encode local error signals…

Tag: preprint

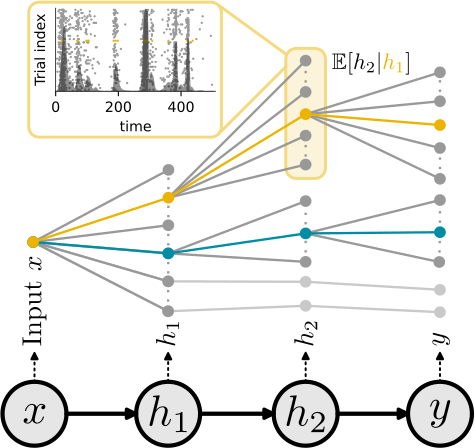

Elucidating the theoretical underpinnings of surrogate gradient learning in spiking neural networks

Surrogate gradients (SGs) are empirically successful at training spiking neural networks (SNNs). But why do they work so well, and what is their theoretical basis? In our new preprint, led by Julia, we answer these…

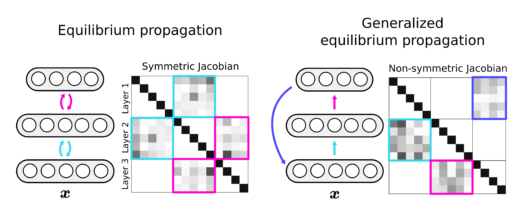

New preprint: Improving equilibrium propagation without weight symmetry

I am happy to announce our new preprint on “Improving equilibrium propagation (EP) without weight symmetry through Jacobian homeostasis,” led by Axel. https://arxiv.org/abs/2309.02214 EP prescribes a local learning rule and uses recurrent dynamics for credit…

Preprint: The remarkable robustness of surrogate gradient learning for instilling complex function in spiking neural networks

We just put up a new preprint https://www.biorxiv.org/content/10.1101/2020.06.29.176925v1 in which we take a careful look at what makes surrogate gradients work. Spiking neural networks are notoriously hard to train using gradient-based methods due to their…

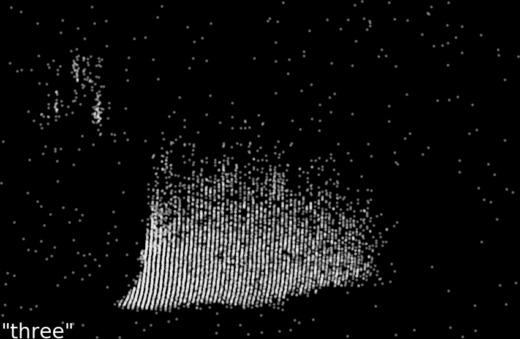

Preprint: The Heidelberg spiking datasets for the systematic evaluation of spiking neural networks

Update (2020-12-30): Now published: Cramer, B., Stradmann, Y., Schemmel, J., and Zenke, F. (2020). The Heidelberg Spiking Data Sets for the Systematic Evaluation of Spiking Neural Networks. IEEE Transactions on Neural Networks and Learning Systems…

The temporal paradox of Hebbian learning and homeostatic plasticity

I am happy that our article on “The temporal paradox of Hebbian learning and homeostatic plasticity” was just published in Current Opinion in Neurobiology (full text). This article concisely presents the main arguments for…