I am delighted to get the chance to present my work on learning in spiking neural networks on Tuesday, 15th of May 2018 at 10:15am at TU Berlin.

Title: What can we learn about synaptic plasticity from spiking neural network models?

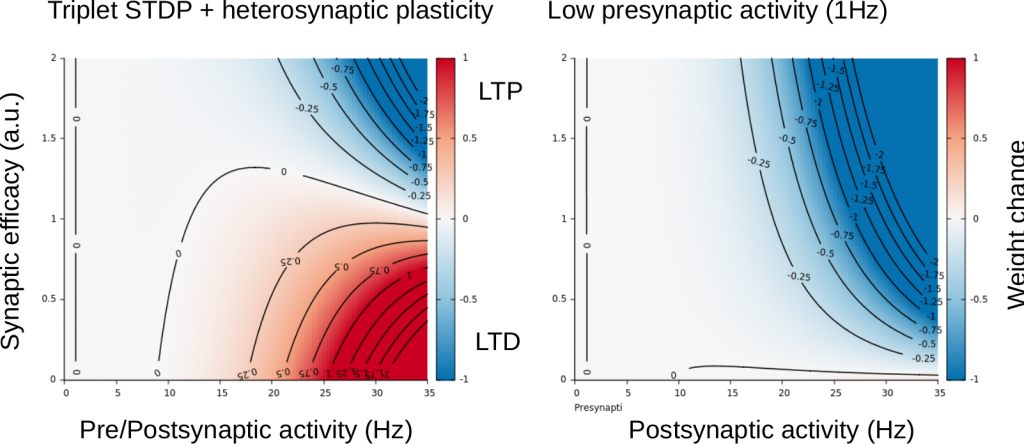

Abstract: Long-term synaptic changes are thought to be crucial for learning and memory. To achieve this feat, Hebbian plasticity and slow forms of homeostatic plasticity work in concert to wire together neurons into functional networks. This is the story you know. In this talk, however, I will tell a different tale. Starting from the iconic notion of the Hebbian cell assembly, I will show the challenges that different forms of synaptic plasticity have to meet to form and stably maintain cell assemblies in a network model of spiking neurons. Constantly teetering on the brink of disaster, a diversity of synaptic plasticity mechanisms must work in symphony to avoid exploding network activity and catastrophic memory loss. Specifically, I will explain why stable circuit function requires rapid compensatory processes, which act on much shorter timescales than homeostatic plasticity, and I will discuss possible mechanisms. In the second part of my talk, I will revisit the problem of supervised learning in deep spiking neural networks. In particular, I am going to introduce the SuperSpike trick to derive surrogate gradients and show how it can be used to build spiking neural network models that solve difficult tasks by taking full advantage of spike timing. Finally, I will show that plausible approximations of such surrogate gradients naturally lead to a voltage-dependent three-factor Hebbian plasticity rule.
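
For readers who have not met surrogate gradients before, here is a minimal sketch of the general idea, not the implementation from the talk. The forward pass keeps the hard spike threshold, while the backward pass swaps the step function's derivative (zero almost everywhere) for the derivative of a fast sigmoid, the form commonly associated with SuperSpike. The function names, the single-neuron setup `u = w * x`, the squared-error loss, and the values of `theta` and `beta` are all assumptions chosen for illustration.

```python
def spike(u, theta=1.0):
    """Forward pass: hard threshold (Heaviside step) on the membrane potential u."""
    return 1.0 if u >= theta else 0.0


def surrogate_grad(u, theta=1.0, beta=10.0):
    """Stand-in for d(spike)/du: derivative of a fast sigmoid centred at theta.

    beta (illustrative value) controls how sharply the surrogate peaks at threshold.
    """
    return 1.0 / (1.0 + beta * abs(u - theta)) ** 2


def weight_grad(w, x, target, theta=1.0, beta=10.0):
    """Approximate gradient of 0.5 * (spike(u) - target)**2 w.r.t. w, with u = w * x.

    The chain rule is applied as usual, except that the derivative of the
    step function is replaced by surrogate_grad.
    """
    u = w * x
    error = spike(u, theta) - target
    return error * surrogate_grad(u, theta, beta) * x
```

With the true derivative of the step function, `weight_grad` would be zero for almost every input, so a silent neuron could never learn to fire. With the surrogate, a subthreshold neuron that should have spiked receives a non-zero, negative gradient, and a gradient-descent step increases its weight.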