We are excited about another year of SNUFA, our friendly focus meeting on spiking neural networks that solve real-world problems. Check out our work on SNNs in biologically plausible olfactory memory networks, decoding BMI data, …

Author: fzenke

Tengjun, Julia, and Julian win first prize at the BioCAS neural decoding challenge

We are proud of our team for winning first prize at the BioCAS neural decoding grand challenge with their work on decoding cortical spike trains using artificial spiking neural networks. Congratulations! Liu, T., …

Tengjun, Julia, and Julian win the Ruth Chiquet Prize

Congratulations to Tengjun, Julia, and Julian on being awarded the Ruth Chiquet Prize! The award recognizes their work on using spiking neural networks to decode brain signals. “The committee was impressed by the elegance of …

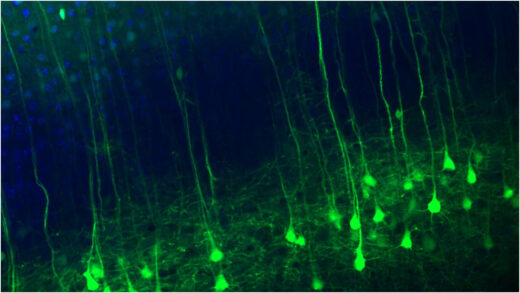

Hiring: Exploring the circuit mechanisms for learning and simulating a world model

Understanding how the brain constructs and simulates world models is a fundamental challenge in neuroscience. We are looking for someone to join our crew to explore the neuronal circuit mechanisms for learning and simulating world …

Discussing science at Les Rasses

Julia gave a nice talk on spiking nets at a focus meeting at Les Rasses, which included the obligatory hike up the Chasseron. Thanks to Christian Lüscher and Andreas Lüthi for organizing the meeting. Photo by …

Kavli Neuroscience Workshop

Julian and Friedemann very much enjoyed the Kavli Neuroscience extended workshop “Theories of neural computations in the era of large-scale recordings” in Trondheim. Thanks again to all the organizers and participants for a truly stimulating …

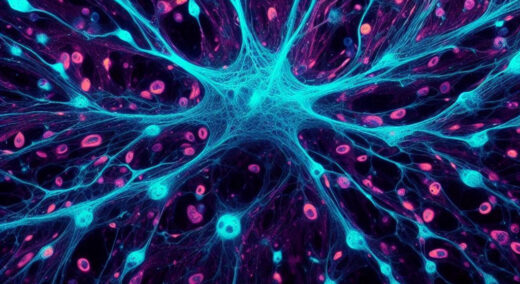

Hiring: Deep learning-based design of synthetic regulatory elements for cell-type-specific targeting

Understanding the molecular mechanisms that drive cell-type-specific gene expression in the brain is crucial for advancing circuit neuroscience. The wealth of genome-wide information on gene regulation available today puts us in a position to decipher …

High school students build neuronal circuits

At the annual Tage der Genforschung event, the FMI opens its doors to interested high school students from neighboring cities. This year, team members from our group showed the students how to build simple …

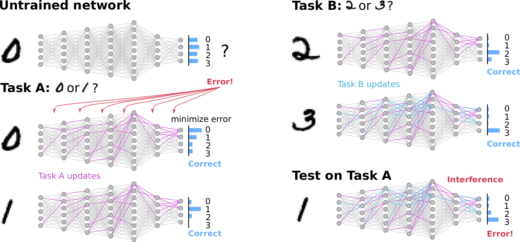

Preprint: Theories of synaptic memory consolidation and intelligent plasticity for continual learning

We are happy to present our new preprint: Zenke, F., Laborieux, A., 2024. Theories of synaptic memory consolidation and intelligent plasticity for continual learning. https://arxiv.org/abs/2405.16922

It is a book chapter in the making that covers the …