Excited that our preprint “Improved multitask learning through synaptic intelligence” just went live on the arXiv (https://arxiv.org/abs/1703.04200). This article, by Ben Poole, Surya and myself, illustrates the benefits of complex synaptic dynamics for continual learning in neural networks. Here is a short summary of why I am particularly excited about this work, with a focus on its neuroscience side.

“How much should I care?” This is the question a synapse has to ask itself when it comes to updating its efficacy to form a new memory. If the synapse goes “all in” and devotes itself fully to forming the new memory, it might decide to substantially change its weight. Old memories, which may have been encoded using the same synaptic weight, might be damaged or overwritten entirely by this process. However, if a synapse clings too tightly to its own past and barely changes its weight, it becomes difficult to form new memories. This trade-off has been termed the plasticity-stability dilemma. To overcome this dilemma, several complex synapse and plasticity models have been proposed over the years (Fusi et al., 2005; Clopath et al., 2008; Barrett et al., 2009; Lahiri and Ganguli, 2013; Ziegler et al., 2015; Benna and Fusi, 2016). Most of these models implement intricate hidden synaptic dynamics on multiple timescales, which in some cases are augmented by some form of neuromodulatory control. However, these models are typically studied in abstract analytical frameworks, in which their direct impact on memory performance and on the learning of real-world tasks is often hard to measure. In our article, we have now taken a step towards looking at the functional benefits of complex synaptic models in network models which learn to perform real-world tasks.

In the manuscript, we investigated the problem of multi-task learning in which a neural network has to solve a standard classification task. But, instead of having access to all the training data at once, the network is trained bit by bit on one sub-task at a time. For example, suppose you want to learn the (MNIST) digits. Instead of giving you all the labels, your supervisor only shows you zeros and ones at first. Then, the next day, you get to see twos and threes, and so forth. When you train a standard MLP on these tasks one by one, by the time you get to 8 and 9, you will have forgotten about 0 and 1. In machine learning this problem is called catastrophic forgetting (McCloskey and Cohen, 1989; Srivastava et al., 2013; Goodfellow et al., 2013), and it is closely related to the plasticity-stability dilemma introduced above. In our article, we propose a straightforward mechanism by which each synapse “remembers” how important it is for achieving good performance on a given set of tasks. The more important a synapse “thinks” it is for storing past memories, the more reluctant it becomes to update its efficacy to store new memories. It turns out that when all synapses in a network do that, it becomes relatively simple for the network to learn new things while still remembering the old stuff.
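To make the “reluctance” idea a bit more concrete, here is a minimal toy sketch, not the implementation from the manuscript. It assumes one simple way of expressing reluctance: a quadratic penalty that pulls each weight back towards its value after the old tasks, scaled by that weight's importance. The function `consolidated_step`, the strength parameter `c`, the importance values and the toy “new task” are all made up for illustration.

```python
import numpy as np

def consolidated_step(theta, task_grad, theta_ref, importance, lr=0.02, c=1.0):
    """One gradient step on: new-task loss + c * sum(importance * (theta - theta_ref)**2).

    The quadratic form of the penalty is an assumption of this sketch.
    """
    penalty_grad = 2.0 * c * importance * (theta - theta_ref)
    return theta - lr * (task_grad + penalty_grad)

theta_ref = np.array([1.0, 1.0])    # weights after learning the old tasks
importance = np.array([10.0, 0.0])  # hypothetical: synapse 0 matters, synapse 1 does not
target = np.array([0.0, 0.0])       # hypothetical new task pulls both weights to zero

theta = theta_ref.copy()
for _ in range(200):
    task_grad = theta - target      # gradient of 0.5 * ||theta - target||**2
    theta = consolidated_step(theta, task_grad, theta_ref, importance)

print(theta)  # the important weight stays near 1, the unimportant one moves towards 0
```

In this toy setting the unimportant synapse is free to follow the new task, while the important one settles at a compromise close to its old value.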

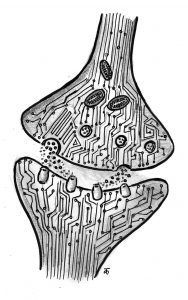

The mechanism has a simple yet beautiful connection to computing the path integral over the gradient field. The details do not matter here, but to give you the gist of it: What’s really cool and somewhat surprising is that for gradient-based learning schemes, a synapse can estimate its own contribution to improvements of the global objective based on purely local measurements. All a synapse needs to have access to is its own gradient component and its own weight update. Additionally, the synapse needs to have some memory of its recent past. To implement these dynamics, the synaptic state space needs to be 3–4 dimensional, depending on how you count. From a biological point of view, this dimensionality does not seem too unreasonable given the chemical and molecular complexity of real synapses (Redondo and Morris, 2011). I find this pretty neat, but of course there are plenty of open questions left for future studies. For instance, in the manuscript we use backprop to train our networks. That makes the gradient itself a highly nonlocal quantity, and for multilayer networks it is still largely unclear how this gradient gets to the synapse. But one step at a time. How credit assignment is achieved in the deep layers of the brain is still very much an open problem, but a lot of people are working on it, so we can hope for some progress on that topic soon.
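The local bookkeeping described above can be sketched in a few lines. This is a toy illustration under stated assumptions rather than the code used in the manuscript: a small quadratic objective stands in for the network loss, training is plain gradient descent, and the names `train_and_track` and `omega` are made up for this example. Each parameter accumulates the product of its own gradient component and its own update, and the sum of these per-parameter quantities should approximately recover the total drop in the loss along the training path.

```python
import numpy as np

def loss(theta, A, b):
    """Toy quadratic objective standing in for a network's training loss."""
    r = A @ theta - b
    return 0.5 * float(r @ r)

def grad(theta, A, b):
    """Gradient of the toy objective with respect to theta."""
    return A.T @ (A @ theta - b)

def train_and_track(theta0, A, b, lr=1e-3, steps=2000):
    """Gradient descent that also accumulates a per-parameter contribution
    using only each parameter's own gradient component and own update."""
    theta = theta0.copy()
    omega = np.zeros_like(theta)  # running per-synapse contribution
    for _ in range(steps):
        g = grad(theta, A, b)
        delta = -lr * g           # each parameter's own weight update
        omega += -g * delta       # local product: -(gradient) * (update)
        theta += delta
    return theta, omega

rng = np.random.default_rng(0)
A = rng.normal(size=(20, 5))
b = rng.normal(size=20)
theta0 = rng.normal(size=5)

theta, omega = train_and_track(theta0, A, b)
total_drop = loss(theta0, A, b) - loss(theta, A, b)

print("per-parameter contributions:", omega)
print("sum of contributions:", omega.sum())
print("actual drop in loss:", total_drop)
```

The two printed totals agree only up to the discretization error of the finite step size, which is why the sketch uses a small learning rate.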

Anyway, I am looking forward to feedback and suggestions 🙂

Bibliography

- Barrett, A.B., Billings, G.O., Morris, R.G.M., and van Rossum, M.C.W. (2009). State Based Model of Long-Term Potentiation and Synaptic Tagging and Capture. PLoS Comput Biol 5, e1000259.

- Benna, M.K., and Fusi, S. (2016). Computational principles of synaptic memory consolidation. Nat Neurosci advance online publication.

- Clopath, C., Ziegler, L., Vasilaki, E., Büsing, L., and Gerstner, W. (2008). Tag-Trigger-Consolidation: A Model of Early and Late Long-Term-Potentiation and Depression. PLoS Comput Biol 4, e1000248.

- Fusi, S., Drew, P.J., and Abbott, L.F. (2005). Cascade models of synaptically stored memories. Neuron 45, 599–611.

- Goodfellow, I.J., Mirza, M., Xiao, D., Courville, A., and Bengio, Y. (2013). An Empirical Investigation of Catastrophic Forgetting in Gradient-Based Neural Networks. arXiv:1312.6211 [Cs, Stat].

- Lahiri, S., and Ganguli, S. (2013). A memory frontier for complex synapses. In Advances in Neural Information Processing Systems, (Tahoe, USA: Curran Associates, Inc.), pp. 1034–1042.

- McCloskey, M., and Cohen, N.J. (1989). Catastrophic Interference in Connectionist Networks: The Sequential Learning Problem. In Psychology of Learning and Motivation, G.H. Bower, ed. (Academic Press), pp. 109–165.

- Redondo, R.L., and Morris, R.G.M. (2011). Making memories last: the synaptic tagging and capture hypothesis. Nat Rev Neurosci 12, 17–30.

- Srivastava, R.K., Masci, J., Kazerounian, S., Gomez, F., and Schmidhuber, J. (2013). Compete to Compute. In Proceedings of the 26th International Conference on Neural Information Processing Systems, (USA: Curran Associates Inc.), pp. 2310–2318.

- Ziegler, L., Zenke, F., Kastner, D.B., and Gerstner, W. (2015). Synaptic Consolidation: From Synapses to Behavioral Modeling. J Neurosci 35, 1319–1334.
