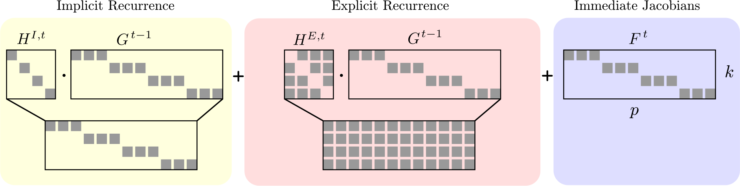

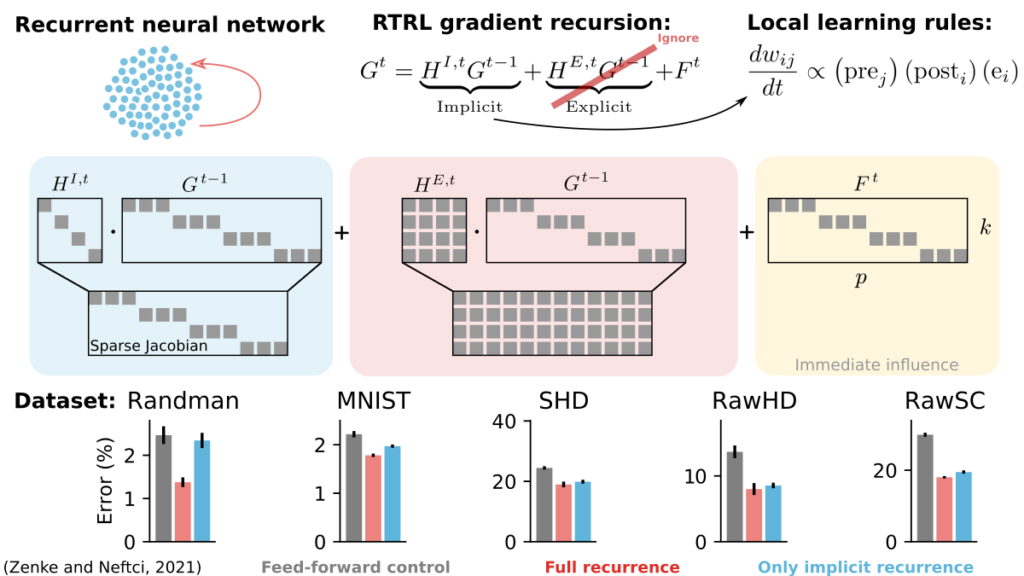

I’m happy to share our new overview paper on brain-inspired learning on neuromorphic substrates in (spiking) recurrent neural networks (https://ieeexplore.ieee.org/document/9317744, preprint: https://arxiv.org/abs/2010.11931). Exact Real-Time Recurrent Learning (RTRL; Williams and Zipser, 1989) computes gradients online but is expensive. For a fully connected network of n units, it tracks the sensitivity of every state to every weight, which costs O(n³) memory and O(n⁴) operations per time step. We systematically analyze how combining RTRL with the implicit recurrence inherent to biologically inspired neuron models (for example, the leaky membrane potential that carries a neuron’s state from one time step to the next) suggests sparse approximations that yield computationally efficient online learning rules. This analysis links RTRL with a wide range of recent bio-plausible online learning rules, including e-prop (Bellec et al., 2020), OSTL (Bohnstingl et al., 2020), RFLO (Murray, 2019), DECOLLE (Kaiser et al., 2020), and SuperSpike (Zenke and Ganguli, 2018).

Further, we discuss these rules in the context of alternative variational learning approaches and of other learning schemes such as spiking FORCE (Nicola and Clopath, 2017) and FOLLOW (Gilra and Gerstner, 2017). Finally, our paper sketches exciting future research directions at the confluence of computational neuroscience, neuromorphic engineering, and machine learning, via the emerging field of sparseness in recurrent neural networks (Menick et al., 2020).
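To make the RTRL argument concrete, here is a minimal sketch that is not taken from the paper. It assumes a hypothetical non-spiking leaky rate network, v_t = α·v_{t−1} + W x_t + U tanh(v_{t−1}), with a squared-error loss on tanh(v_t). The model and the names `step`, `loss`, `online_grad` and `alpha` are illustrative only. Exact RTRL would propagate the sensitivity of every state to every weight. Keeping only the implicit recurrence (the leak α) leaves a single eligibility trace per synapse, updated forward in time, in the spirit of e-prop and RFLO.

```python
import math
import random

# Hypothetical leaky rate network used only for illustration (not the paper's model).

def step(v, x, W, U, alpha):
    """One time step: v_t = alpha * v_{t-1} + W x_t + U tanh(v_{t-1})."""
    r_prev = [math.tanh(vk) for vk in v]
    return [alpha * v[j]
            + sum(W[j][i] * x[i] for i in range(len(x)))
            + sum(U[j][k] * r_prev[k] for k in range(len(v)))
            for j in range(len(v))]

def loss(W, U, xs, ys, alpha):
    """Squared error between tanh(v_t) and targets y_t, summed over time."""
    v = [0.0] * len(W)
    total = 0.0
    for x, y in zip(xs, ys):
        v = step(v, x, W, U, alpha)
        total += 0.5 * sum((math.tanh(v[j]) - y[j]) ** 2 for j in range(len(v)))
    return total

def online_grad(W, U, xs, ys, alpha):
    """Forward-only gradient estimate for the input weights W.

    Exact RTRL tracks dv_t[j]/dW[a][b] for every (j, a, b). Keeping only the
    implicit recurrence (the leak alpha) leaves one trace per synapse,
        e_t[j][i] = alpha * e_{t-1}[j][i] + x_t[i],
    and drops every contribution routed through the explicit weights U.
    """
    n, m = len(W), len(W[0])
    v = [0.0] * n
    e = [[0.0] * m for _ in range(n)]   # eligibility traces, same shape as W
    g = [[0.0] * m for _ in range(n)]   # accumulated gradient estimate
    for x, y in zip(xs, ys):
        v = step(v, x, W, U, alpha)
        e = [[alpha * e[j][i] + x[i] for i in range(m)] for j in range(n)]
        for j in range(n):
            r = math.tanh(v[j])
            # Local error at time t: d(loss_t)/d(v_t[j]) for the current step only.
            learning_signal = (r - y[j]) * (1.0 - r * r)
            for i in range(m):
                g[j][i] += learning_signal * e[j][i]
    return g
```

With `U` set to all zeros, the leak is the only path through time, so `online_grad` should match a finite-difference gradient of `loss`. With nonzero `U`, the dropped terms make it an approximation whose memory cost is one trace per weight instead of a full sensitivity tensor.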

References

- Bellec, G., Scherr, F., Subramoney, A., Hajek, E., Salaj, D., Legenstein, R., and Maass, W. (2020). A solution to the learning dilemma for recurrent networks of spiking neurons. Nature Communications 11, 3625. https://www.nature.com/articles/s41467-020-17236-y

- Bohnstingl, T., Woźniak, S., Maass, W., Pantazi, A., and Eleftheriou, E. (2020). Online spatio-temporal learning in deep neural networks. arXiv:2007.12723. https://arxiv.org/abs/2007.12723

- Gilra, A., and Gerstner, W. (2017). Predicting non-linear dynamics by stable local learning in a recurrent spiking neural network. eLife 6, e28295. https://doi.org/10.7554/eLife.28295

- Kaiser, J., Mostafa, H., and Neftci, E. (2020). Synaptic plasticity dynamics for deep continuous local learning (DECOLLE). Frontiers in Neuroscience 14, 424. https://www.frontiersin.org/articles/10.3389/fnins.2020.00424

- Menick, J., Elsen, E., Evci, U., Osindero, S., Simonyan, K., and Graves, A. (2020). A practical sparse approximation for real time recurrent learning. arXiv:2006.07232. https://arxiv.org/abs/2006.07232

- Murray, J.M. (2019). Local online learning in recurrent networks with random feedback. eLife 8, e43299. https://elifesciences.org/articles/43299

- Nicola, W., and Clopath, C. (2017). Supervised learning in spiking neural networks with FORCE training. Nature Communications 8, 2208. https://www.nature.com/articles/s41467-017-01827-3

- Williams, R.J., and Zipser, D. (1989). A learning algorithm for continually running fully recurrent neural networks. Neural Computation 1, 270–280.

- Zenke, F., and Ganguli, S. (2018). SuperSpike: Supervised learning in multilayer spiking neural networks. Neural Computation 30, 1514–1541. https://arxiv.org/abs/1705.11146