We are happy that Tengjun, another lab alumnus, graduated with flying colors from his home university in China. Tengjun was a visiting student from Zhejiang University in our group from 2023 to 2024. He worked…

Tag: spiking neural networks

SNN Workshop in Munich

Gitta Kutyniok, the Bavarian AI Chair for Mathematical Foundations of Artificial Intelligence, and her team at the LMU organized an inspiring workshop on spiking neural networks with a fantastic lineup of speakers including Wolfgang Maass, …

SNUFA 2024

We are excited about another year of SNUFA, our friendly focus meeting on spiking neural networks that solve real-world problems. Check out our work on SNNs in biologically plausible olfactory memory networks, decoding BMI data, …

Tengjun, Julia, and Julian win the Ruth Chiquet prize

Congratulations to Tengjun, Julia, and Julian for being awarded the Ruth Chiquet Prize! The award recognizes their work on using spiking neural networks to decode brain signals. “The committee was impressed by the elegance of…

High school students build neuronal circuits

At the annual Tage der Genforschung event, the FMI opens its doors to interested high school students from the neighboring cities. This year, team members from our group showed the students how to build simple…

SNUFA 2023

For all spiking neural network lovers out there, make sure to block November 7-8 in your agenda and submit a 300-word abstract to SNUFA 2023 (https://snufa.net/2023). Invited speakers this year include: … In previous years we…

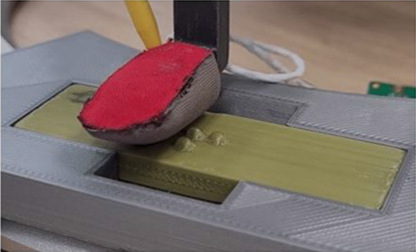

A tactile robotic fingertip and a spiking neural network read Braille

This fun project started as a summer student project at Telluride 2021, led and advised by Chiara Bartolozzi and me. An incredibly motivated group of summer students taught a robotic fingertip with tactile sensors and…

Announcing SNUFA 2022

We are happy to announce SNUFA 2022, an online workshop focused on research advances in the field of “Spiking Networks as Universal Function Approximators.” SNUFA 2022 will take place on 9-10 November 2022, during the European afternoon.

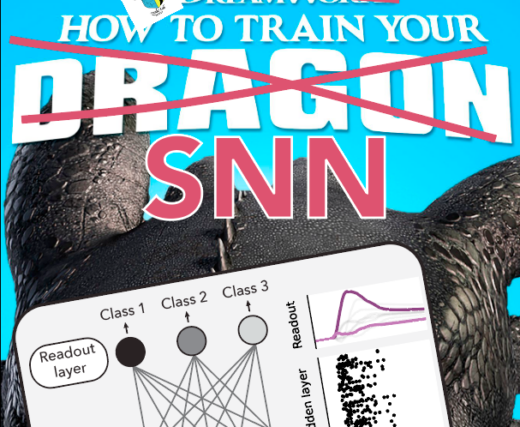

Fluctuation-driven initialization for spiking neural network training

Surrogate gradients are a great tool for training spiking neural networks in computational neuroscience and neuromorphic engineering, but what is a good initialization? In our new preprint, co-led by Julian and Julia, we lay out…

Story on Spiking Neural Networks

Here is a quick shout-out to this nice news story on spiking nets and surrogate gradients by Anil Ananthaswamy, the Science Communicator in Residence at the Simons Institute for the Theory of Computing in Berkeley.