This year, a group of computational neuroscientists, consisting of group leaders and trainees from Basel, Bern, Geneva, Lausanne, and Zurich, convened in Crans-Montana for an intensive three-day in-person event with talks and discussions on recent…

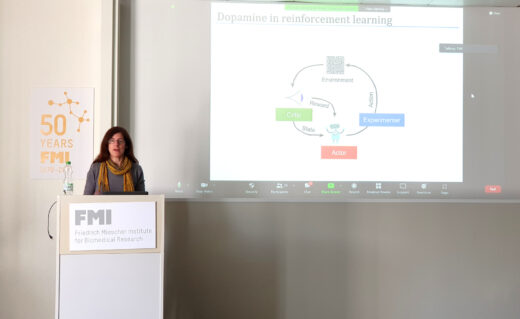

Back to in-person Comp Neuro Seminars in Basel

After a pause of almost two years, the Computational Neuroscience Initiative Basel organized its first in-person seminar, with Prof. Adrienne Fairhall (University of Washington). The hybrid event was attended by a broad audience from the Biozentrum, IOB,…

Quanta Magazine covers our work on learning on analog neuromorphic hardware

Allison Whitten wrote a nice article for Quanta Magazine about our recent collaboration with Heidelberg on the BrainScaleS-2 neuromorphic chip. Analog hardware faces obstacles similar to those the brain faces when learning: neuronal heterogeneity means that you won’t…

Join the team – we are hiring

We are looking for undergrads, PhD students, and post-docs who possess dependable quantitative skills and are curious about how neural networks in the brain learn and process information. Research directions of interest include: We offer…

Story on Spiking Neural Networks

Here is a quick shout-out to this nice news story on spiking nets and surrogate gradients by Anil Ananthaswamy, the Science Communicator in Residence at the Simons Institute for the Theory of Computing in Berkeley.

Eccellenza Fellowship for Friedemann Zenke

We were thrilled to learn that the Swiss National Science Foundation will support our research with an Eccellenza Fellowship. Many thanks to @snsf_ch, the selection panel, and the reviewers for their trust. Thanks also to @FMIscience…

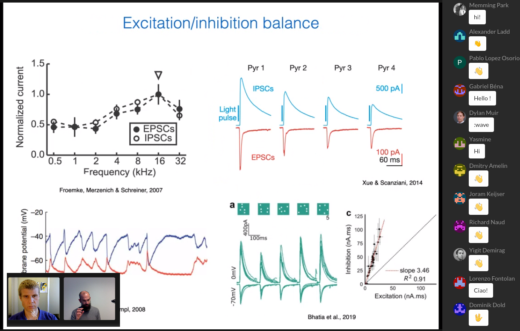

SNUFA 2021 recordings online

Above you see Henning Sprekeler (TU Berlin) talking about the ubiquitous excitation/inhibition balance in the brain during his talk’s intro. It is only one of the many good memories of a delightful SNUFA 2021 meeting. Thanks again to…

Announcing the SNUFA 2021 workshop

I am stoked about the second edition of our successful SNUFA workshop on “spiking neural networks as universal function approximators,” on 2-3 November 2021 (we moved it a week later than the original date to avoid…

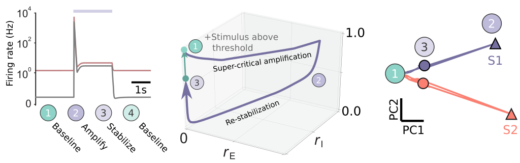

Paper: Nonlinear transient amplification in recurrent neural networks with short-term plasticity

Update (21.12.2021): Now published at https://elifesciences.org/articles/71263. I am pleased to share our new preprint, “Nonlinear transient amplification in recurrent neural networks with short-term plasticity,” led by Yue Kris Wu, in which we study possible mechanisms for…

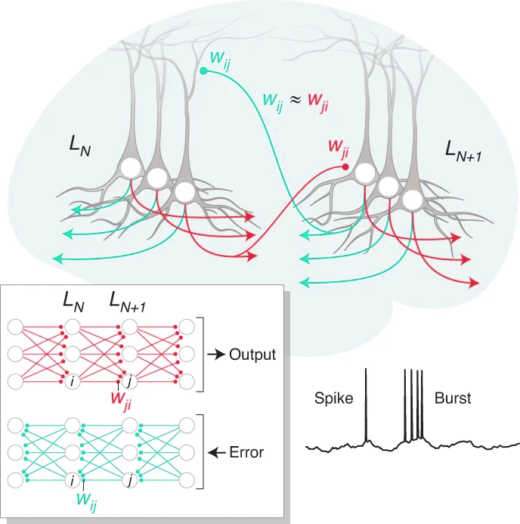

Paper: Burst-dependent synaptic plasticity can coordinate learning in hierarchical circuits

We’re excited to see this published. A new take on spatial credit assignment in cortical circuits based on Richard Naud’s idea of burst multiplexing. A truly collaborative effort led by Alexandre Payeur and Jordan Guerguiev…