I am eagerly anticipating fun discussions at Cosyne 2019. We have a poster at the main meeting and I will give two talks at the workshops. If biological learning and spiking neural networks tickle your fancy, come along. I will post some details and supplementary material below.

Poster

Thursday, 28 February 2019, 8:30 pm, Poster Session 1

I-98 Rapid spatiotemporal coding in trained multi-layer and recurrent spiking neural networks. Friedemann Zenke, Tim P Vogels

PDF download

Code: Surrogate gradients in PyTorch

Please try this at home! I have put the early beginnings of a tutorial on how to train simple spiking networks with surrogate gradients using PyTorch here:

https://github.com/fzenke/spytorch
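
For readers new to the idea, here is a minimal sketch in plain Python of what a surrogate gradient does. It is not the code from the repository: the function names, the fast-sigmoid-shaped surrogate, the steepness `beta`, and the toy one-weight task are all assumptions chosen for illustration. The forward pass uses a hard threshold, whose true derivative is zero almost everywhere, and the backward pass substitutes a smooth stand-in so that gradient descent can still move the weight.

```python
# Illustrative sketch only: names and parameter values are assumptions,
# not taken from the spytorch repository.

def spike(u):
    """Forward pass: hard threshold on the membrane potential relative to threshold."""
    return 1.0 if u > 0.0 else 0.0

def surrogate_grad(u, beta=10.0):
    """Backward-pass stand-in for the step's derivative: 1 / (beta*|u| + 1)^2."""
    return 1.0 / (beta * abs(u) + 1.0) ** 2

def grad_wrt_weight(w, x, theta=1.0, target=1.0):
    """Gradient of 0.5*(spike - target)^2 with respect to w, using the surrogate."""
    u = w * x - theta              # membrane potential relative to threshold theta
    error = spike(u) - target      # derivative of the loss with respect to the spike
    return error * surrogate_grad(u) * x

# Toy task: a single input x should make the unit spike.
w, x = 0.5, 1.0
for _ in range(200):
    w -= 0.5 * grad_wrt_weight(w, x)  # plain gradient descent, learning rate 0.5

print(w, spike(w * x - 1.0))  # the weight has grown until the unit spikes
```

With the true derivative of the step function the update would be zero at every iteration and `w` would never change. In PyTorch the same idea is typically expressed as a custom autograd function with separate forward and backward passes.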

Emre, Hesham, and I are planning to release a more comprehensive collection of code in the near future to accompany our tutorial paper. Stay tuned!

Talks at the workshops

9:25-9:50 on Monday, 4th of March 2019 in the workshop “Continual learning in biological and artificial neural networks”

Title: “Continual learning through synaptic intelligence”

PDF slides download

The talk will be largely based on:

- Zenke, F.*, Poole, B.*, and Ganguli, S. (2017).

Continual Learning Through Synaptic Intelligence.

Proceedings of the 34th International Conference on Machine Learning (ICML), pp. 3987–3995.

fulltext | preprint | code | talk

9:40-10:10 on Tuesday, 5th of March 2019 in the workshop “Why spikes? – Understanding the power and constraints of spiking based computation in biological and artificial neuronal networks” (more)

Title: “Computation in spiking neural networks — Opportunities and challenges”

I will talk about unpublished results and:

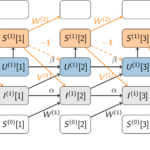

- Neftci, E.O., Mostafa, H., and Zenke, F. (2019).

Surrogate Gradient Learning in Spiking Neural Networks.

arXiv:1901.09948 [cs, q-bio].

preprint

- Zenke, F. and Ganguli, S. (2018).

SuperSpike: Supervised learning in multi-layer spiking neural networks.

Neural Comput 30, 1514–1541. doi: 10.1162/neco_a_01086

fulltext | preprint | code