We are looking for a Ph.D. student to work on context-dependent information processing in biologically inspired neural networks. We will investigate the effect of stereotypical circuit motifs and neuromodulation on neural information processing and learning…

Author: fzenke

The lab at Cosyne 2021

The lab selfie at Cosyne 2021 clearly looks different from what we would have imagined a year ago.

Publication: Visualizing a joint future of neuroscience and neuromorphic engineering

Happy to share this report summarizing the key contributions, discussion outcomes, and future research directions presented at the Spiking Neural Networks as Universal Function Approximators meeting (SNUFA2020).

Paper: https://www.cell.com/neuron/fulltext/S0896-6273(21)00009-X

Author link: https://authors.elsevier.com/a/1cbg3_KOmxI%7E6f

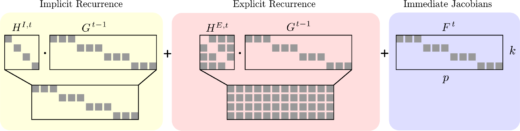

Paper: Brain-Inspired Learning on Neuromorphic Substrates

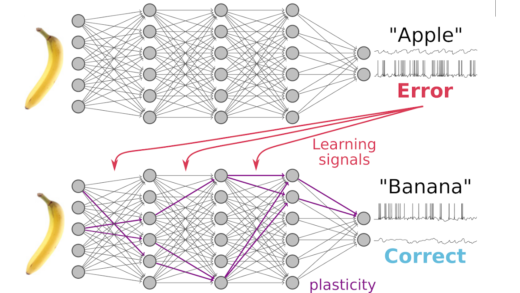

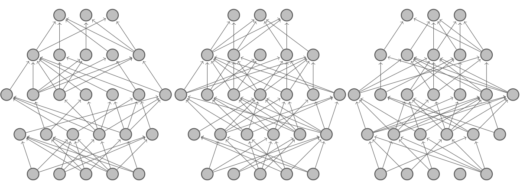

I’m happy to share our new overview paper (https://ieeexplore.ieee.org/document/9317744, preprint: arxiv.org/abs/2010.11931) on brain-inspired learning on neuromorphic substrates in (spiking) recurrent neural networks. We systematically analyze how the combination of Real-Time Recurrent Learning (RTRL; Williams and Zipser, …
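RTRL computes exact gradients online: instead of storing the trajectory and propagating errors backward in time (as backpropagation through time does), it carries the sensitivity of each hidden state with respect to the weights forward in time. The sketch below is our own illustration, not the paper's formulation. It assumes a single scalar tanh unit (not a spiking network), so the sensitivity is just one number, and all function and variable names are hypothetical. It checks the online gradient against a central finite difference.

```python
import math


def rtrl_grad(w, u, xs, ys, h0=0.0):
    # Hypothetical scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t),
    # with loss L = sum_t 0.5 * (h_t - y_t)^2.
    # RTRL carries p_t = dh_t/dw forward, so dL/dw is accumulated online.
    h, p = h0, 0.0  # h0 is fixed, so its sensitivity p_0 is zero
    loss, grad = 0.0, 0.0
    for x, y in zip(xs, ys):
        h_prev = h
        h = math.tanh(w * h_prev + u * x)
        # Sensitivity update: direct dependence on w (h_prev) plus the
        # indirect path through the previous state (w * p_{t-1}).
        p = (1.0 - h * h) * (h_prev + w * p)
        loss += 0.5 * (h - y) ** 2
        grad += (h - y) * p  # dL/dw contribution of this time step
    return loss, grad


def loss_only(w, u, xs, ys):
    return rtrl_grad(w, u, xs, ys)[0]


xs = [1.0, 0.5, -0.3, 0.8]
ys = [0.2, 0.1, 0.0, 0.4]
w, u, eps = 0.7, 0.9, 1e-6
_, g = rtrl_grad(w, u, xs, ys)
g_fd = (loss_only(w + eps, u, xs, ys) - loss_only(w - eps, u, xs, ys)) / (2 * eps)
print(f"RTRL gradient: {g:.8f}, finite difference: {g_fd:.8f}")
```

For a fully connected network of N units, the same bookkeeping needs one sensitivity per (unit, weight) pair, that is N³ numbers updated with O(N⁴) work per time step, which is why cheaper approximations to RTRL are attractive for neuromorphic hardware.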

Kris and Claire present exciting excitation-inhibition work at the Bernstein conference

Both Claire and Kris will present virtual posters about their modeling work in the olfactory system. If you are interested in learning more about what precise EI balance and transient attractor states have to do…

Hiring: Information processing in spiking neural networks

We are looking for Ph.D. students to work on the computational principles of information processing in spiking neural networks. The project strives to understand computation in the sparse spiking and sparse connectivity regime, in which…

Online workshop: Spiking neural networks as universal function approximators

Dan Goodman and I are organizing an online workshop on new approaches to training spiking neural networks, Aug 31st / Sep 1st 2020. Invited speakers: Sander Bohte (CWI), Iulia M. Comsa (Google), Franz Scherr (TUG), Emre Neftci (UC Irvine), …

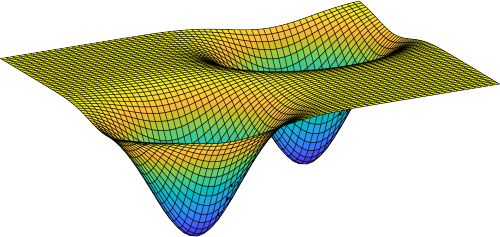

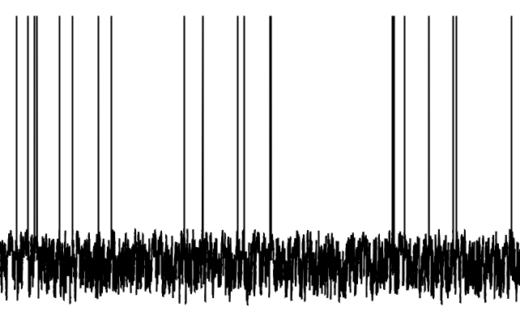

Preprint: The remarkable robustness of surrogate gradient learning for instilling complex function in spiking neural networks

We just put up a new preprint https://www.biorxiv.org/content/10.1101/2020.06.29.176925v1 in which we take a careful look at what makes surrogate gradients work. Spiking neural networks are notoriously hard to train using gradient-based methods due to their…
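The spiking nonlinearity is a step function, so its derivative is zero everywhere except at the threshold, where it is undefined. Surrogate gradients keep the step in the forward pass but substitute a smooth pseudo-derivative in the backward pass. Here is a minimal sketch under our own assumptions: a single non-leaky neuron evaluated for one time step, a fast-sigmoid surrogate with a hypothetical steepness `beta=10`, and a toy squared-error task. The function names are illustrative and not taken from the preprint's code.

```python
def spike(v, threshold=1.0):
    # Forward pass: Heaviside step on the membrane potential.
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, beta=10.0):
    # Backward pass: derivative of a fast sigmoid centered on the threshold,
    # used in place of the step's derivative (zero almost everywhere).
    return 1.0 / (beta * abs(v - threshold) + 1.0) ** 2


def train_single_neuron(x=1.0, target=1.0, w=0.2, lr=1.0, max_steps=1000):
    # Hypothetical toy task: adjust one weight until the output spike matches
    # the target, minimizing loss = 0.5 * (s - target)**2.
    for step in range(max_steps):
        v = w * x  # membrane potential (no leak, single time step)
        s = spike(v)
        if s == target:
            return w, step
        # Chain rule with the surrogate standing in for d(spike)/dv.
        grad_w = (s - target) * surrogate_grad(v) * x
        w -= lr * grad_w
    return w, max_steps


w, steps = train_single_neuron()
print(f"w = {w:.3f} after {steps} steps, spike = {spike(w)}")
```

With the true derivative of the step, `grad_w` would be zero at every iteration and the weight would never move; the surrogate supplies a small but nonzero signal that grows as the potential approaches threshold.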

Paper: Finding sparse trainable neural networks through Neural Tangent Transfer

New paper led by Tianlin Liu on “Finding sparse trainable neural networks through Neural Tangent Transfer” https://arxiv.org/abs/2006.08228 (and code), which was accepted at ICML. In the paper we leverage the neural tangent kernel to instantiate sparse neural…
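The neural tangent kernel (NTK) of a network f with parameters θ is K(x, x′) = ∇θ f(x) · ∇θ f(x′). As we understand it, Neural Tangent Transfer looks for a sparse network whose NTK-governed training dynamics resemble those of a dense one. The sketch below does not implement the NTT optimization. It only computes the empirical NTK of a tiny one-hidden-layer tanh network, with and without a hand-picked (hypothetical) pruning mask, and measures the Frobenius distance between the two kernels. Network sizes, weights, and function names are all illustrative assumptions.

```python
import math


def param_grads(x, w, v, mask_w, mask_v):
    # Hypothetical net: f(x) = sum_j (mv_j * v_j) * tanh((mw_j * w_j) * x).
    # Returns df/dtheta for every raw parameter; pruned ones contribute 0.
    grads = []
    for wj, vj, mw, mv in zip(w, v, mask_w, mask_v):
        t = math.tanh(mw * wj * x)
        grads.append(mv * t)                            # df/dv_j
        grads.append(mv * vj * (1.0 - t * t) * x * mw)  # df/dw_j
    return grads


def empirical_ntk(xs, w, v, mask_w, mask_v):
    # K[a][b] = <df(x_a)/dtheta, df(x_b)/dtheta>
    G = [param_grads(x, w, v, mask_w, mask_v) for x in xs]
    return [[sum(ga * gb for ga, gb in zip(Ga, Gb)) for Gb in G] for Ga in G]


def kernel_distance(K1, K2):
    # Frobenius norm of the difference between two kernel matrices.
    return math.sqrt(sum((a - b) ** 2
                         for r1, r2 in zip(K1, K2)
                         for a, b in zip(r1, r2)))


xs = [-1.0, -0.5, 0.5, 1.0]
w = [0.8, -1.2, 0.3, 1.5]
v = [0.5, 0.7, -0.4, 0.2]
dense = [1, 1, 1, 1]
sparse = [1, 0, 1, 1]  # hypothetical mask: prune the second unit's input weight

K_dense = empirical_ntk(xs, w, v, dense, dense)
K_sparse = empirical_ntk(xs, w, v, sparse, dense)
print(f"NTK distance dense vs sparse: {kernel_distance(K_dense, K_sparse):.4f}")
```

A method in the spirit of NTT would search over masks and weights to make this kind of distance small; here the mask is fixed by hand only to show what is being compared.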