We are deeply honored to see our work featured in The Transmitter’s “This Paper Changed My Life.” Huge thanks to Dan Goodman for the kind words – and to our amazing community that keeps pushing …

Tag: surrogate gradients

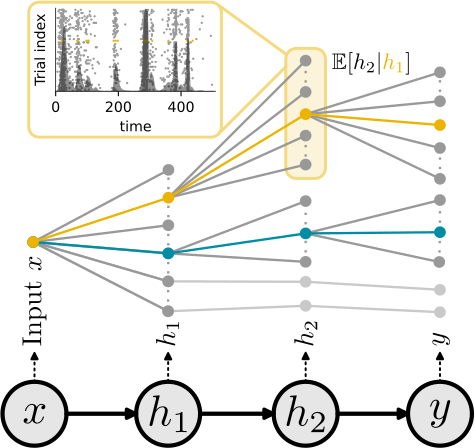

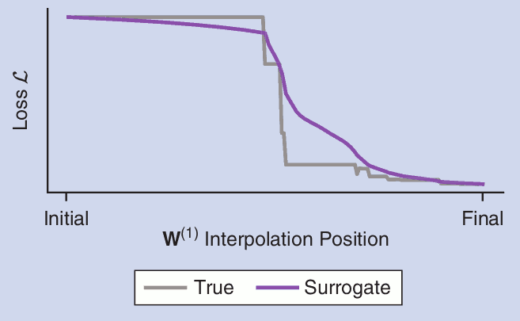

Elucidating the theoretical underpinnings of surrogate gradient learning in spiking neural networks

Surrogate gradients (SGs) are empirically successful at training spiking neural networks (SNNs). But why do they work so well, and what is their theoretical basis? In our new preprint led by Julia, we answer these …

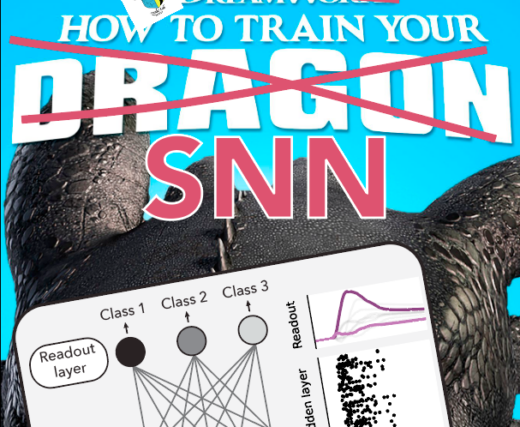

Fluctuation-driven initialization for spiking neural network training

Surrogate gradients are a great tool for training spiking neural networks in computational neuroscience and neuromorphic engineering, but what is a good initialization? In our new preprint co-led by Julian and Julia, we lay out …

Spektrum der Wissenschaft story on self-calibration in neuromorphic systems

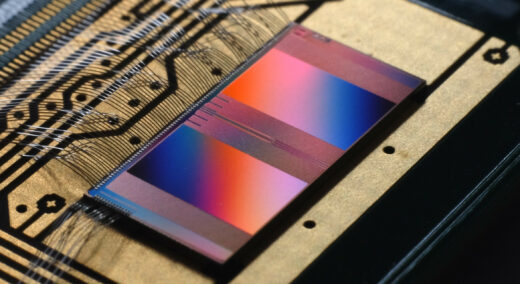

We are very happy that in their current issue Spektrum der Wissenschaft picked up our collaboration story with Uni Heidelberg on self-calibration through surrogate gradient learning in analog neuromorphic systems.

Story on Spiking Neural Networks

Here is a quick shout-out to this nice news story on spiking nets and surrogate gradients by Anil Ananthaswamy, the Science Communicator in Residence at the Simons Institute for the Theory of Computing in Berkeley.

Preprint: The remarkable robustness of surrogate gradient learning for instilling complex function in spiking neural networks

We just put up a new preprint https://www.biorxiv.org/content/10.1101/2020.06.29.176925v1 in which we take a careful look at what makes surrogate gradients work. Spiking neural networks are notoriously hard to train using gradient-based methods due to their …

Paper: Surrogate gradients for analog neuromorphic computing

Update (22.01.2022): Now published as Cramer, B., Billaudelle, S., Kanya, S., Leibfried, A., Grübl, A., Karasenko, V., Pehle, C., Schreiber, K., Stradmann, Y., Weis, J., et al. (2022). Surrogate gradients for analog neuromorphic computing. PNAS …

Paper: Surrogate Gradient Learning in Spiking Neural Networks

“Bringing the Power of Gradient-based optimization to spiking neural networks” We are happy to announce that our tutorial paper on Surrogate Gradient Learning in spiking neural networks has now appeared in the IEEE Signal Processing Magazine.

Tutorial on surrogate gradient learning in spiking networks online

Please try this at home! I just put up a beta version of a tutorial showing how to train spiking neural networks with surrogate gradients using PyTorch: https://github.com/fzenke/spytorch Emre, Hesham, and I are planning to …